Research Aims to Give Robots Human-Like Social Skills

One argument for why robots will never fully measure up to people is that they lack human-like social skills.

But researchers are experimenting with new methods to give robots social skills to better interact with humans. Two new studies provide evidence of progress in this kind of research.

One experiment was carried out by researchers from the Massachusetts Institute of Technology, MIT. The team developed a machine learning system for self-driving vehicles that is designed to learn the social characteristics of other drivers.

The researchers studied driving situations to learn how other drivers on the road were likely to behave. Since not all human drivers act the same way, the data was meant to teach the driverless car to avoid dangerous situations.

The researchers say the technology uses tools borrowed from the field of social psychology. In this experiment, scientists created a system that attempted to decide whether a person's driving style is more selfish or selfless. In road tests, self-driving vehicles equipped with the system improved their ability to predict what other drivers would do by up to 25 percent.

In one test, the self-driving car was observed making a left-hand turn. The study found the system could cause the vehicle to wait before making the turn if it predicted that the oncoming drivers would act selfishly and might be unsafe. But when oncoming vehicles were judged to be selfless, the self-driving car could make the turn without delay because it saw less risk of unsafe behavior.
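The left-turn decision described above can be pictured with a small Python sketch. It is only an illustration and not the MIT team's actual method. The selfishness score between 0 and 1, the names `OncomingDriver`, `required_gap` and `should_wait`, and the gap values in seconds are all assumptions made for this example.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class OncomingDriver:
    # Hypothetical score: 0.0 means fully selfless, 1.0 means fully selfish.
    selfishness: float
    # Seconds until this driver reaches the intersection.
    time_to_intersection: float


def required_gap(selfishness: float, base_gap: float = 4.0, extra_gap: float = 3.0) -> float:
    """Seconds of clearance to demand; the values are assumed, not from the study."""
    # The more selfish a driver is predicted to be, the larger the gap demanded.
    return base_gap + extra_gap * selfishness


def should_wait(drivers: Sequence[OncomingDriver]) -> bool:
    """Wait if any oncoming driver would arrive sooner than the gap demanded of them."""
    return any(
        d.time_to_intersection < required_gap(d.selfishness) for d in drivers
    )


if __name__ == "__main__":
    selfless = OncomingDriver(selfishness=0.1, time_to_intersection=5.0)
    selfish = OncomingDriver(selfishness=0.9, time_to_intersection=5.0)
    print(should_wait([selfless]))  # the car turns without delay
    print(should_wait([selfish]))   # the car waits
```

In this sketch, two drivers who are the same distance away lead to different decisions only because of the predicted driving style. That is the behavior the article describes, reduced to a single rule.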

Wilko Schwarting is the lead writer of a report describing the research. He told MIT News that any robot working with or operating around humans needs to be able to effectively learn their intentions to better understand their behavior.

"People's tendencies to be collaborative or competitive often spill over into how they behave as drivers," Schwarting said. He added that the MIT experiments sought to understand whether a system could be trained to measure and predict such behaviors.

The system was designed to understand the right behaviors to use in different driving situations. For example, even the most selfless driver should know that quick and decisive action is sometimes needed to avoid danger, the researchers noted.

The MIT team plans to expand its research model to cover more of what a self-driving vehicle might need to deal with. This includes predictions about people walking around traffic, as well as bicycles and other things found in driving environments.

The researchers say they believe the technology could also be used in vehicles with human drivers. It could act as a warning system against other drivers judged to be behaving aggressively.

Another social experiment involved a game competition between humans and a robot. Researchers from Carnegie Mellon University tested whether a robot's "trash talk" would affect humans playing in a game against the machine. To "trash talk" is to talk about someone in a negative or insulting way, usually to get them to make a mistake.

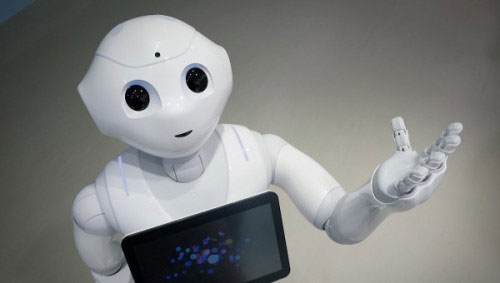

A humanoid robot, named Pepper, was programmed to say things to a human opponent like "I have to say you are a terrible player." Another robot statement was, "Over the course of the game, your playing has become confused."

In the study, each of the humans played a game with the robot 35 different times. The game, called Guards and Treasures, is used to study decision making. The study found that players criticized by the robot generally performed worse in the games than those who received praise.

One of the lead researchers was Fei Fang, an assistant professor at Carnegie Mellon's Institute for Software Research. She said in a news release the study represents a departure from most human-robot experiments. "This is one of the first studies of human-robot interaction in an environment where they are not cooperating," Fang said.

The research suggests that humanoid robots have the ability to affect people socially just as humans do. Fang said this ability could become more important in the future when machines and humans are expected to interact regularly.

"We can expect home assistants to be cooperative," she said. "But in situations such as online shopping, they may not have the same goals as we do."

I'm Bryan Lynn.