The fields of artificial intelligence and machine learning are moving so quickly that any notion of ethics lags decades behind or is left to works of science fiction.

This might explain a new study out of Shanghai Jiao Tong University, which says computers can tell whether you will be a criminal based on nothing more than your facial features.

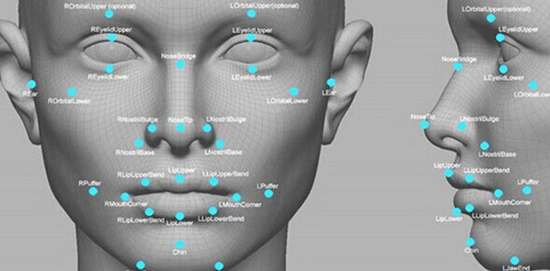

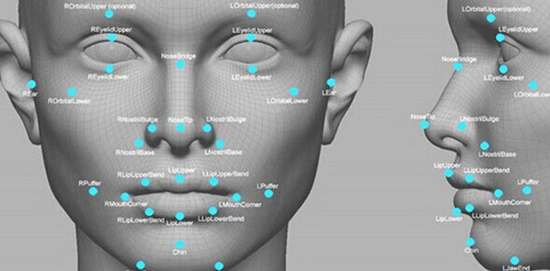

In a paper titled "Automated Inference on Criminality using Face Images," two Shanghai Jiao Tong University researchers say they fed "facial images of 1,856 real persons" into computers and found "some structural features for predicting criminality, such as lip curvature, eye inner corner distance, and the so-called nose-mouth angle."

They conclude that "all classifiers perform consistently well and produce evidence for the validity of automated face-induced inference on criminality, despite the historical controversy surrounding the topic."

In the 1920s and 1930s, the Belgians, acting as the occupying power in Rwanda, put together a national program to identify individuals' ethnic identity through phrenology. It was an abortive attempt to create an ethnicity scale based on measurable physical features such as height, nose width and weight.

The study contains virtually no discussion of why there is a "historical controversy" over this kind of analysis: namely, that it was debunked hundreds of years ago.

Rather, the authors trot out another discredited argument to support their main claims: that computers can't be racist, because they're computers.

> Unlike a human examiner/judge, a computer vision algorithm or classifier has absolutely no subjective baggages, having no emotions, no biases whatsoever due to past experience, race, religion, political doctrine, gender, age, etc.

> Besides the advantage of objectivity, sophisticated algorithms based on machine learning may discover very delicate and elusive nuances in facial characteristics and structures that correlate to innate personal traits.